The content you see on social media, or on Facebook at least, is not as random as you might think. The Facebook algorithm is how Facebook decides which posts users see, and in what order, every time they check their News Feeds.

Credit: Hootsuite.com

In the early days, when the Facebook algorithm was born in 2009, posts were sorted by popularity.

Over time, however, Facebook decided to do whatever it could to keep users on the platform. The reasoning is simple: the more time users spend on Facebook, the more ads they can be shown.

Right now there is a lot of negativity on Facebook. There are positive posts too, but many news articles claim that the Facebook algorithm organically boosts negative talking points more than positive ones.

While Facebook is free to use, Mark Zuckerberg pays the bills by charging advertisers to run ads on the platform.

The more time people spend on Facebook, the more money flows to Facebook's shareholders.

Facebook's Shareholders

Mark Zuckerberg, with a net worth of $54.7 billion according to Forbes, holds over 400 million shares of Facebook with a combined market value of around $82.2 billion.

The Vanguard Group Inc., an investment management company, holds approximately 184.0 million shares of Facebook with a combined market value of about $37.7 billion.

BlackRock, an asset and investment management firm, holds about 158.2 million shares of Facebook with a combined market value of $32.3 billion.

FMR LLC, a financial services company, holds approximately 123.6 million shares of Facebook with a combined market value of $26.1 billion.

T. Rowe Price, an investment management company offering portfolio management, equities, fixed income, and asset allocation services, holds about 107.8 million shares of Facebook with a combined market value of $22.1 billion. Source: Investopedia

Facebook Algorithm Changed to Prioritize Friends & Fam

Mark Zuckerberg announced in 2018 that, going forward, one of the biggest changes to Facebook would be modifying the algorithm to prioritize posts from friends and family over posts from businesses, brands, and media.

However, that's not what happened. Instead of fostering more communities of friends and family, the change was followed by studies revealing that over 50% of the increase in engagement came paired with increased divisiveness, outrage, and angry reactions.

At the same time, the way the Facebook algorithm prioritized content ended up boosting fringe fake-news posts from scam artists and spammers who knew how to game the engagement algorithm.

If It Bleeds, It Leads

Journalism in the news media has always had a slant toward the negative. Although there are plenty of positive topics that are newsworthy, these are often ignored in favor of shock-and-awe and graphic violence stories.

So much so that there is even a catchphrase journalists use: “If it bleeds, it leads.”

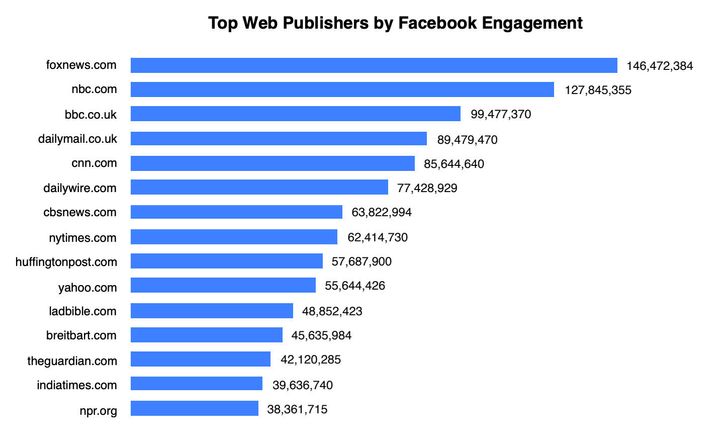

In a sense, Facebook has allowed itself to fall prey to the same ideology, with one big predator creating the most unhappiness in millions of people’s lives as they scroll the News Feed: Fox News.

“Angry” is the top reaction to political content, though not to other types of content. Fox News hired social media managers who knew how to make the hearts of millions bleed with divisive, negative stories on Facebook:

Credit: newswhip.com

It's clear Faux News knows exactly what it is doing, and does it well. Negative, hateful content gets more reactions, especially when it is backed by a consistent narrative, a steady posting schedule, and a relentless factory of discontented posts.

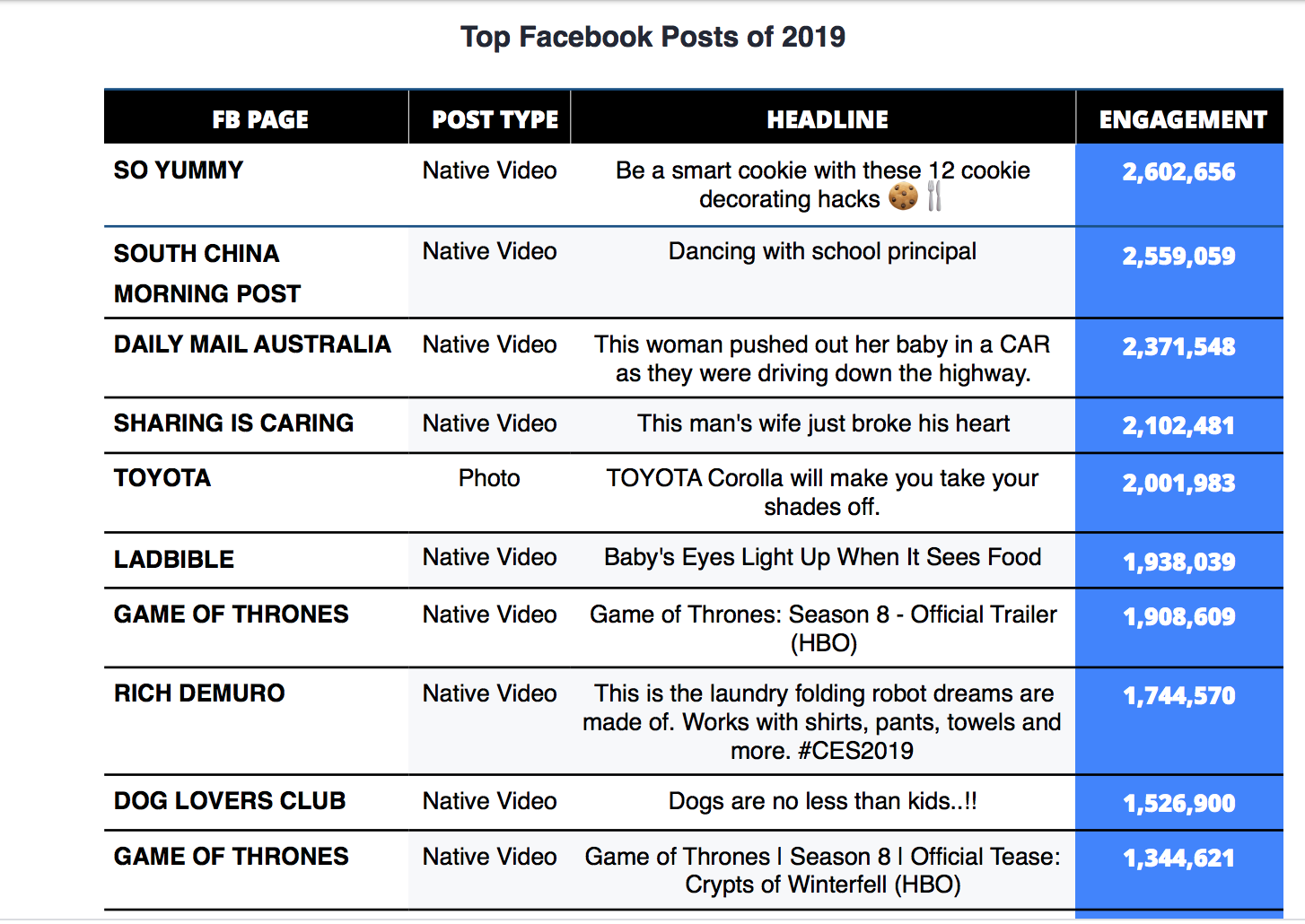

That's not the entire story, though. Plenty of other posts have drawn a high number of positive reactions. Posts about babies, pets, and baking cookies have also gotten incredible reach.

The So Yummy Facebook Page's post on 12 cookie decorating hacks drew over 2 MILLION engagements:

Credit: newswhip.com

So while many accuse Facebook of intentionally hurting people's feelings and spreading discontent, that isn't reality. Reality isn't one single narrative. When you subscribe to only one way of thinking, you miss what's happening in the periphery.

The reality is that Facebook is a tool. If bad actors spend more time learning how to use this tool and get more adept at distributing high-engagement content, they will dominate the Facebook News Feed.

Credit: Anthony Quintano

What's curious is how many people seem to miss the fact that reactions such as laugh and love, when they arrive in high numbers, drive just as much engagement on Facebook.

The narrative that keeps getting repeated is that Facebook only supports negative content, when studies by NewsWhip, for instance, show this simply isn't true.

NYU professor Scott Galloway, who is known for his outspoken views on Facebook and whose perspective I value, also missed this essential fact in a recent interview with Fast Company:

"What these companies [eg Facebook] have done is created a business model where the most incendiary, upsetting, controversial, and oftentimes false and damaging things get more oxygen than they deserve because we are a tribal species and when people say things that are upsetting we tend to engage. Engagement equals enrichment. The more rage equals the more clicks equals the more Nissan ads. So these algorithms have figured out that if you promote the flawed junk science of anti-vaxxers, it increases shareholder value. There is a population of people out there that believe vaccinations are bad, and they should be heard. But they should not dominate health news so that you start getting these stories from your friends on Facebook. It starts adding legitimacy, and then there’s a trend around anti-vaccination, and more two- and three-year-old boys and girls have their limbs amputated because of an outbreak of measles which we thought we had conquered 30 or 40 years ago."

There is definitely a lot of truth to what Galloway says about accountability, fact-checking, and antitrust laws not being enforced against big tech companies like Facebook and Google. But he misses the point that there is more than one narrative here.

Positive stories get distribution and crazy amounts of reactions too: just share a photo or video of a puppy and see how many reactions and likes it gets. But, as stated before, when companies that want to use negativity to drive reactions put their posting into overdrive, they will also generate massive social media engagement.

One headline I read recently said:

"Facebook Prioritizes What Makes You ‘Sad’ Or ‘Angry’ Over What You ‘Like’"

Yet this is false: besides likes, reactions also include wow, laugh, love, and the new Care reaction, not just sad or angry.

Later in the article, the author says:

"...all reactions are weighted the same, which means the News Feed prioritizes things you “love” equally to things that make you “angry” or “sad.”"

But the headline mentioned only the negative emotions.

¯\_(ツ)_/¯

This is an example of cherry-picking information to frame a story around a bias: telling a pre-existing narrative instead of examining the data and seeing the full picture. How you use a tool determines the emotional outcome.
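To make the quoted point about equal weighting concrete, here is a toy ranking sketch in Python. It is not Facebook's News Feed algorithm: the weights (`LIKE_WEIGHT`, `REACTION_WEIGHT`), the `Post` class, the `engagement_score` and `rank_feed` helpers, and the reaction counts are all hypothetical, chosen only to show that if every reaction type carries the same weight, a post drawing love and laughs can score exactly as high as one drawing anger.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical weights for illustration only; these are NOT Facebook's real values.
LIKE_WEIGHT = 1.0
REACTION_WEIGHT = 5.0  # arbitrary: one shared weight for every non-"like" reaction
REACTIONS = ("love", "care", "haha", "wow", "sad", "angry")

# Equal weighting, as described in the quoted article: every reaction counts the same.
WEIGHTS: Dict[str, float] = {"like": LIKE_WEIGHT, **{r: REACTION_WEIGHT for r in REACTIONS}}


@dataclass
class Post:
    title: str
    reactions: Dict[str, int] = field(default_factory=dict)


def engagement_score(post: Post, weights: Dict[str, float]) -> float:
    """Toy ranking signal: a weighted sum of reaction counts."""
    return sum(weights.get(kind, 0.0) * count for kind, count in post.reactions.items())


def rank_feed(posts: List[Post], weights: Dict[str, float]) -> List[Post]:
    """Order posts by score, highest first; ties keep their original order."""
    return sorted(posts, key=lambda p: engagement_score(p, weights), reverse=True)


# Made-up reaction counts, for illustration only.
posts = [
    Post("Divisive political story", {"like": 200, "angry": 900, "sad": 100}),
    Post("12 cookie decorating hacks", {"like": 200, "love": 700, "haha": 150, "wow": 150}),
    Post("Quiet status update", {"like": 300}),
]

if __name__ == "__main__":
    for post in rank_feed(posts, WEIGHTS):
        print(f"{engagement_score(post, WEIGHTS):6.0f}  {post.title}")
```

Run as-is, the divisive post and the cookie post both score 5200 and share the top spots, while the like-only post trails at 300. Under equal weighting, what lifts a post is the volume of reactions, not whether they are angry or joyful.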

What's even more disturbing is how willing the public is to accept a pre-framed narrative without investigating it; reactions become almost robotic. (Read Great Leaders Don't Create Robots for more tips on leadership.)

Ironically, a lot of the trite dialogue on social media could have been written by a computer algorithm: one person trading talking points they didn't think up or research themselves with another person doing the same thing.

Many people don't study anything that doesn't already confirm their existing bias; they look for things on Facebook that support it rather than researching their own beliefs.

It would be great to see folks get informed beyond the three primary outdated, emotionally charged talking points in posts that get shared without anyone even googling the origins of the information or fact-checking it against multiple sources.

Forgive me; perhaps I am biased about people who are biased, since I worked at a college teaching students how to cross-reference primary and secondary sources. It's important to authenticate data sources before becoming emotionally invested in what you think is the absolute truth. But back to Facebook.

It seems like there is a lot of opportunity right now to prey upon people's fears on social media, but there are just as many opportunities to encourage hope, trust and faith that things will get better.

I've seen posts celebrating the bravery of health care workers get amazing distribution, positive reactions and countless likes.

Don't fall victim to a one-story narrative. Examine all sides and cross-reference sources to make sure you aren't being fed a one-dimensional version of a five-dimensional reality.

I encourage individual Facebook users, as well as companies and entrepreneurs, to use the tools we have wisely, and to do our best to create networks that support authenticity, mutual respect, and a narrative that promotes sustainable social networks.

. . .

Enjoyed this blog?

Sign up here to get updates on new startup blogs.

Check out my Medium page here

Is Facebook not explaining why it disapproved an ad?

I worked at FB for years and offer FB Policy Consulting here

Available for freelance writing and guest posting on your blog: [email protected]
